How Will AI Transform Customer Support? [Ft John Wang, Assembled]

I think twenty thirty, you will see very few humans in frontline support. There's a million ways to go. How do you think about the the tech and which ones to to go after first? AI has made a lot of things better, but I think it's actually caused probably the whole industry to take on more than it can chew. There are people who are literally like, they've become so good at doing support and they're like product experts now that they are the ones writing the support articles that then fuel the AI agents. Humans aren't going away, but their job might change. I would imagine that at some point, we're gonna have humans that are just the fact checkers for the AI. Yeah. Right? To see when it's going off the rails. What are those flags that can tell someone that this might be going off the rails? Actually, a lot of different things that you can implement. They fall into a different a few different buckets. One of them is Alright. We are here with John Wang, cofounder and CTO of Assembled. Welcome. Thank you. Appreciate it. I'm happy to be here. Great. Okay. So let's get right into it. Assembled is a platform for customer service Yeah. With AI at the core. Yeah. But not always, because you guys started in twenty eighteen. No. We started and we built out a system that helps you figure out how many people you need at any given time. So when we started, it was actually you know, there's only people, really, that can solve your questions. And the problem was when you got to a thousand people or, you know, five thousand people on your support team, you can't just have them all go nine to five. You need specialization, and you need to figure out how many people do I need at this specialization at any particular time, which is a very difficult problem when you do it at scale. So then LLMs hit the scene. Yeah. What happens? Like, what's the reaction at that point? Is it an oh shit, or is it a oh, this is our savior? Honestly, both. 
When we first saw it, it was like the, you know, the five stages of grief where it's like, oh my god. Our company's gone. And then we were like, hold on. Wait. We're in a really good position to actually make use of these LLMs. Because we had spent four or five years up to that point building out super enterprise customers like Robinhood and Stripe and Salesforce and DoorDash. And, like, we had Fortune five hundred companies and had access to all these support teams. So then we were like, wait. We should be the people who help you build LLMs into your support team. And that's what we ended up doing. What was the internal debate like? And how big were you guys at that point, employee wise? Yeah. We were around sixty folks at that point. Enough people that there's going to be dissent no matter what the goal is, right, whatever the decision is. Totally. Like, if you think back on it, what were the strongest cases one way or the other? Well, there's a lot of strong reactions, especially from the engineering team. I think at the time, we already felt like we were so bootstrapped on engineering capacity and, like, sales capacity and, like, capacity everywhere because we were, like, selling all these deals. And everyone was like, why would we work on this LLM stuff? You know, there's no clear path for productization here while we have, like, all these problems at home. And so there's a big camp here, and then there was a big camp of people who are like, actually, maybe a smaller camp of people who are like, no. We should go do this. And one engineer actually built out a hackathon project that was like a Slack bot that answered questions for you that ended up converting into our first AI product. So it was a hackathon project that, like, got you guys more comfortable with the idea that, like, we can implement this into the larger product and be the people that bring this to market. Totally. Yeah. 
And we may have poked a little bit at, like, making sure that this hackathon project does learn, you know, like Right. Enough hackathon projects come along that use LLMs, but we were very excited because of all the, like, customers that we already had and all of the distribution that we had. And I think our approach is now very different than an AI-native startup where you just don't know as much about support, but I think we come from a very, very support heavy background. Right? And that actually changes the product a lot. So okay, you're doing all the scheduling, and you're helping large enterprise level companies do that. Now you still, what, start building this product on the side as a customer support agent in the form of what, chat at first, or, like, not voice yet? That wasn't out. Yeah. It was not voice. Our first product was actually an agent copilot, and then we built a chatbot, and then we built a voice bot. And the way that we did it was interesting. I don't know if I would do it again this way, but there were ups and downs to it, actually. So myself and two other engineers and one business person kinda, like, broke off, and we, like, put ourselves into a room that we called the dungeon. And we just hacked there for, like, months and months and months. So we didn't, like, come out. We had, like, these two speakers that were blasting, like, Martin Garrix. We just, like, built the product from there. But that caused a lot of tension in our company because they were like, why is our CTO, like, doing this on Slack. Yeah. Yeah. And, like, that was part of the tension at the company of, like, should we do this new LLM thing, or should we kind of continue down our enterprise route? Yeah. So you had road map plans for where the product was gonna go, and this kind of bifurcated. This was like the point where you had to decide are we going this way or not. 
I was about to say what a kind of blessing it is to have a company that's established enough that you can peel that level of executive off Yeah. To go do this for someone, but it sounds like it still was a pain point even with the level of support that you guys had. I think it's always a pain point when a leader or, like, someone influential at the company decides to do something that's, like, almost completely different, especially when there's, like, a lot of stuff going on. Right? But I do think we were in a good enough spot where it wasn't like, hey. You know, the company's gonna collapse. It was just, like, more pain gets passed around. Was there conversations around this potentially opening up more go to market approaches with different ICPs, smaller companies? Like, I don't know if you wanna build this thing and pass it off to the massive company to test it. Totally. Right? So did it open up ideas of going to, you know, mid market and below? We definitely experimented. We were like, hey. Can we make this thing entirely self serve? Because our first product was entirely enterprise. And, like, I think there's this big flip when ChatGPT came out when, like, everyone who's consumer wants to be enterprise and everyone who's enterprise wants to be consumer. And it was like, hey. Now is the time to just rewrite everything. So we tried that, and we're like, oh, crap. We're not actually that good of a consumer company. We, like, know how to do sales, and we know how to do all this go to market stuff. So what we ended up doing was we actually did go much lower market. Like, if you consider our kind of workforce management existing product as enterprise, we actually now target mid market for our AI products. But, you know, it wasn't like, literally, you're gonna go self serve, like, one person shops. So when you walked out of that room three months later, however long it was, what did you have to show for it? 
Like, did you actually have a working product at that point? Totally. We had a working product, and we had a few early experimental customers, and we had a lot of good traction. We basically thought of it as, like, YC. I had done YC back in winter fourteen, so honestly, a really long time ago at this point. And I remember a lot of things about it, but the main thing was, like, this kind of, like, hype cycle, like, what you're doing on a day to day. And you go and you're like, we had a little board, a whiteboard, and we would, like, physically plot. Like, here's where our customer count is. And this was back when we were, like, building ecommerce stuff back in twenty fourteen, and then I was like, we should do the same thing. Because when you physically plot your usage numbers, that's very different than, like, looking at a graph. You actually have to, like, actively engage and be like, oh, our usage number either did not go up or yes, it went up a lot. Right. And it gives you a different perspective on it. Okay. So you guys walk out of this room, you got this product now. You already have a couple customers on it. They were probably small, like mid market Yep. Level. Yeah. Does this change the because like, with LLMs coming out, I equate it back to like early days of Internet. There's probably like SEO agencies that were like launching at the beginning of the Internet going like, you need to use us for twelve months. Yeah. But like, make me a blog. Totally. Was there a point where you saw the sales cycle for what you're selling on the AI side ramp up because people are just getting more comfortable with AI? Yes. And, actually, we've been through a few different cycles already on the AI front. I think the first cycle was a lot of people just being like, what is this? I don't know what this is. 
Then there was a period of time I I wanna say it was, like, late twenty twenty three, maybe early twenty twenty four, where people were like, oh, like, my executives are telling me I really need to do AI, so I'm gonna buy literally anything that has AI on it. And the sales cycle ramped up really quickly. Then it was like, wait. That's dumb. Hey. I actually need to buy something reasonable here. And then they and then we saw a sales spike for, hey. If you have a differentiated approach on AI, not just, like, sticking AI in front of everything, that's when you get the sales cycle. And now I think we're in a place where most people understand what AI is. Most people are looking for kind of how do how do you best integrate with our systems? Who's going to help me get there the best? And who's gonna provide nuance into how we actually add AI into how we work day to day? Do you find that more customers are coming in and saying, what's the impact here? Like, what do we expect what does success look like? Because I I feel like, well, at least what we see at Cadre on our side is we consult. We have a lot of companies that are private equity backed. We know that are what's happening in the boardroom is they're saying every time, like, what's your AI strategy? And it's not a really great answer to be like, well, my marketing person's got a couple tools and my, you know, product person has some tools Yeah. Without having a strategy of of, alright, if we implement this, it's going to save here or there, and we expect to be able to maybe maybe it's grow without this much headcount next year, whatever it is. Do you see more of that happening as as we've gotten more mature from, like, just anybody going and buying it to probably a more refined sales cycle? I think definitely. I think people now expect certain baseline levels of what what's deflection and resolution rate can you actually do. 
That's anywhere from, like, the low end being, like, forty, fifty percent all the way to, like, eighty or ninety percent resolution rate depending on the types of inquiries that come in. Right? And I think people are much more specific about, like, I wanna be hitting this, and I don't wanna have hallucinations. Yes. I don't want to, you know, have my customer that, you know, spends a million dollars with me go through the whole, like, rigmarole of, like, please escalate to a human. What's your problem? Please escalate to, you know, like, they are much more discerning now when you buy software. So, okay, LLMs come out, you probably walked out of that room with a chat feature, not voice. Yeah. Voice starts hitting the scene, and all these different platforms are doing voice. Was there another conversation of, do we get into this or not? It was less a conversation and more just like we built a demo, and we built a really freaking awesome demo. And I think this is how our company more operates is instead of, like, a bunch of conversation, it's just, like, someone who has a lot of wherewithal comes in, and it's just like, I'm just gonna try it. Yeah. And, actually, we built it off of GPT Realtime. And so it was full speech to speech, and this was pretty early on in, like, twenty twenty four sometime. And it was such an amazing experience where you just, like, call the phone. But the problem was we started, like, implementing this and productionalizing it with a bunch of people, and we realized the real time speech to speech just does not hold up on our enterprise stress. And then we actually had to go build out the whole, you know, speech to text, text to speech pipeline. Dig in a little deeper. What's the enterprise stress that is causing it to fail? Let's say that you call your car wash. One of our real customers, like, runs and operates, like, hundreds of car washes. 
And you're like and you start talking in Spanish about having to cancel your car wash membership because you bought it from this other website, and it didn't quite have the same membership specs as you expected on this other website. And the enterprise like, what might happen with a normal chatbot is like, oh, let me pull out some articles, and let me see if this is there. Right? And you could pull out an article quite quite frequently. And, like, in this particular case, there's probably no article Right. That tells you this. Right. So you're going to either hallucinate and be like, hey. Like, blah blah blah blah blah and, like, complete, you know, junk nonsense. Or you can be like, I don't know. Or in the case where, like, what enterprises actually want is like, hey. This is something that needs to get escalated to human. I want these three pieces of information from you to give me the best possible handoff to the right agent, like, not just a normal agent, but, like, an agent who specializes in memberships because this person is buying a membership, is a paying customer, and has intents to buy or do something. Right? That should route me to the right person. Now that is not something that just comes out of the box with GPT Realtime, and you actually need to build that on top. One of the things that I thought was a really interesting use case that you've described is being able to route it to the right agent for that problem. Yeah. How is AI getting that information? Is that a admin level, you know, in the platform saying, John's the best person for this, or is it just listening and learning as to which agents handle certain things the best? I think the best AIs do both. Yeah. Because I think that on one hand, there's a lot of context in people's brains that actually is very, very helpful. That's why humans do so well in support because they just, like, soak up all this context. Right? 
But on the other hand, AI systems do well when you can look over a bunch of data and you can, like, ingest it, or if you can run a system and start ingesting memories and, like, extra context about a person. And so what we do is we actually do both. Right? We pull in information about, like, who who are good agents to do x y z, and we also have data that has come from admins who are like, hey. This person has these skills. And you can actually combine them really well too, because your AI can be like, I think Bob is really good at speaking Japanese. I don't know. Like, can you confirm that? Or Bob, can you confirm that? And that and that makes the system a lot better than just either guessing or having to wait on a human to give you all the information. When you're thinking about your road map for the tech side of this, I mean, there's almost endless ways to go. Right? I mean, you could so, you know, does it is it hard to plan? Because in my mind, I'm going, man, you just what you just said goes, well, why don't we have a scorecard? And maybe you guys already do this, but, like, wouldn't a sales leader want a scorecard of of how everybody's doing across the board? And wouldn't they wanna know who you know, just there's a million ways to go. How do you think about the the tech and which ones to to go after first? Yeah. It's a great question. And honestly, it's one of those things that AI has made a lot of things better, but I think it's actually caused probably the whole industry to take on more than it can chew. Because, actually, the hard part isn't, like, the coding part. It's like the right after you've coded it and built it and it's in production and you have to, like, launch it to a user and the user's like, I have this config that's different. 
And we've definitely fallen victim to chewing off a lot more than we we could have done, but I think now we are come coming back into, we have a few different areas where we really believe that we can provide a lot of value, and we're focusing on those. One of them is voice because we think that there's not very many people who do do a really good support voice system. One of them is in this kind of, like, holistic understanding of when you should go to a human versus when you should go to an AI. I think a lot of the kind of, like, new AI startups that do support, they just that automation number, they're trying to jack it up as high as freaking possible. Right? And that's not actually what most people want, right, as to to what you said earlier. Yeah. The point I had, we were talking off camera earlier, I have a there's a company that I'm close with that tried to implement voice agents to handle inbound calls for existing patients. It was health care, so it's existing patients. A lot of times it would be like refills or something like that it's gonna handle, which should be very easy. They could not get this thing from an existing this wasn't like they were using retail or something like, they were using existing platform built for this with SOC two and HIPAA and everything, and they could not get it to not say the person's first and last name every time. Something so simple is like, just say the first name, and they're like, no problem, Bill Smith. It's like, You know? So there's that, and then, like, they couldn't tell between twins or just little things that you're like, man, how has this part not gotten figured out yet if you're on the market? But I think to your point, it's very easy to roll out features now whether those are working. That's like rolling out a custom GPT. No. It's gotta work at probably ninety nine percent accuracy before someone actually starts using it. First time you get a wrong answer, you're like, this thing sucks. Let's move on. 
I think the entire industry is obsessed with these feature build outs because they're easier to, like, roll out and less obsessed well, I I depends on the company, but less obsessed overall with providing really good high quality, like, basics, like the fundamentals. You know? It's like if you go talk to anyone who plays baseball and you talk to, like, their dad or their grandpa, you know what they're gonna say. It's like, you gotta focus on your fundamentals, right, your grip, and, like, the way you're the way you're looking. And I think that is true also of AI in a lot of ways because we have a lot of really difficult questions that come in. And, actually, how long it takes between you stopping in a conversation and and the AI starting or, like, how you answer a slightly nuanced question or kind of, like, not hanging up in the middle of things. Like, that has a huge, huge impact on the end result. And then you've got instances where someone calls in with something that definitely requires human empathy. And Yeah. So you you probably need to train it to say, do not try to answer this question. This is one to hand off. A hundred percent. We had this we we actually have a customer where they're an ecommerce customer. They sell women's garments, and they had a case where someone was like, hey. Can I extend my return window? And I'm so thankful that this person was like, the reason why is I have a domestic violence case in the court right now, and I've been back and forth between the hospital and the courts, and I haven't been able to think about this return. And I just need, like, a five day extension. Thankfully, our system was, like, flagged this and was like, hey. This needs to go to an agent immediately. And human agent. Not yeah. AI. And I think that's also one of those cases where it's like, yeah. That's not something you want your AI handling. What an egregious use of AI to try to empathize with that person and be like, I'm so sorry. Like, mean right? 
So so you guys were prepared for that Yes. That type of scenario. Yeah. That's the fine tuning component of just getting that right. Did that happen before you like, did you think about that human empathy side of it before it went to market, or was that something that came about in just learning and being like, oh shit, this is A use case came up, and like, we gotta figure this part out. It's both a positive and a negative for us, because we've definitely thought of that beforehand, and it meant that our we were a lot more cautious, actually, to begin with. And now we actually have a tuning mechanism as to how cautious you should be. Do you wanna be incredibly cautious, or you wanna just we call it containment focus, which is just like, you know, you you wanna give as many AI answers as possible. But we generally, and most of our customers tend to be much more, like, on the cautious side because we don't wanna be the ones who are providing a terrible human experience and back and forth when, you know, we all know what it's like to have bad support. And I would imagine that a lot of these companies, they're not just putting this in and going, alright. Fire five people. Right? It's like there is a they're figuring out, hey. Did these people move to another part of the organization? But I would imagine there's a time period where it's the, I'd rather have these people sitting there with nothing to do while AI is answering the calls, and be the backup in case it flows through. Yeah. Because like you said, there's probably a lot of promises out there when it comes to how much this is gonna handle versus I think there was a study that, like, seventy nine percent of people grew more frustrated with the the chatbot or the AI, and eighty percent of them were like, why ought talk to a human anyway? So it was the the use. 
I think there's this really I've thought a lot about what what are humans gonna do, and I think, actually, something that people might not know is that most support teams already have built in layers of escalation. So there's, like, tier one support, which oftentimes is the stuff that you like, when you call and you get an Indian or Philippine call center, that's often tier one support and is very, very cheap. And then but that's not where it stops. There's, like, multiple tiers of escalations above that. And the tiers of escalation actually like, you basically are thinking more. Tier two support is usually like, hey. How do I handle this escalation? You know, what do I do about, you know, if someone calls in with, you know, a domestic abuse case, things like that. Yeah. There's also tiers above that where people are actually thinking about how do I tune systems? Like, what types of support requests am I getting all the time? And, like, how do I go and update our systems or update our knowledge base to do that better? There are people who are literally like, they've become so good at doing support, and they're, like, product experts now that they are the ones writing the support articles that then fuel the AI AI agents. So so in a sense, like, you know, humans aren't going away, but their job might change. And it might change quite a bit, but I think the stuff that humans still provide is a lot of context. I would imagine that at some point, we're gonna have humans that are just the fact checkers for the AI Yeah. Right, to see when it's going off the rails and and where. If a company's thinking about implementing some kind of AI customer service product, how do they how do they, like, know when that might be happening? Are there flags? Are there anything to be like, hey. This was you know, we don't know exactly how if this is the right way to handle it, we handled it, but, like, you guys should listen to this call. 
Especially at the enterprise level where there's probably hundreds of thousands of calls, there's no way to manually review them. Yeah. What are those flags that can tell someone that this might be going off the rails, hallucinating, whatever? There's actually a lot of different things that you can implement. Usually, they fall into a few different buckets. One of them is flags, as you mentioned, which is alerting on any conversation that looks bad. And in our product, what we do is we have a bunch of processes that are, like, looking at every single conversation that comes through and checking against many different criteria as to, like, you know, was this person really frustrated? Did we cite internal information? Did we not provide an article when necessary, etcetera, etcetera? But there's also kind of the in the moment kind of flagging, which you can do, which is when you generate a message, you can actually decide on whether or not to send that message, and you can stop that message and basically be like, hey. This doesn't pass all my criteria. We call them guardrails. That's actually a lot more expensive because you have to wait for your entire message to get generated and for you to look at the entire message. And so it makes the user experience much worse. Right. And it's a trade off for different enterprises. Some enterprises are very, very tight knit, and other enterprises are like, I would like to see some kind of, like, balance between those two things. Have you had instances or even know of any from other companies where there was just an egregiously wrong answer to some kind of customer service where it either cost the company or whatever. Like, it's gotta happen. Right? It happens a lot. There was a very famous hallucination where someone, I think this was, like, an airline. Someone asked for a refund, and the chatbot was just like, yeah. No. Happy to give you a refund. 
And they I was they actually didn't have a refund policy for that. They were like, no. Actually, we shouldn't be giving you a refund here, but they went to courts. And the court said, hey. Your chatbot said this. It's on your website. You have to uphold this. And after that happened, the whole industry got a little way tighter on everything that you could potentially hallucinate on. So it was actually a positive thing Yeah. In many respects. Any, you know, common mistakes that you're seeing with companies who have customer support teams who are thinking about doing this, like, what are they? Do you want me to give the you went too fast or you went too slow? Let's just start with it too slow. Too slow. Okay. I I've seen teams spend six to twelve months, like, building out here's what's gonna happen with my chatbot. I'm going to test internally, and I'm going to make sure I test every nook and edge case, and I'm gonna put all these guardrails on there. And I'm gonna make sure that when it comes out, it's gonna be the best thing since sliced bread. And then as soon as they turn it on, it's like someone asked a question that no one has ever thought of. Right? The experts internally who were testing it never thought of the probably most common question. Right. Right. And it's because, like, you're not your users usually. Yeah? And, like, I I think going too slowly can be a real hindrance because you end up building stuff or, like, trying to find solutions to problems that didn't ever exist, and the real problems are out there that you still haven't uncovered yet. So But too fast? Too fast. I mean, a lot of hallucinations I've seen. It's like, if you if you do it it's not hallucinations in the sense of, like, hey. I'm gonna offer you offer you a refund incorrectly. But it's more like, hey. We don't have the ability to understand what is the actual outcome that you should have here. 
And so there might not actually be a knowledge article or a tool that you can use for any of these things. And if your AI actually goes in multiple times and is like, hey. I don't have this information. I don't have this information. We actually internally think of that as as bad as a hallucination because, like, we can't answer your question. Right? So I think that if you don't get all of the pieces of context and API calls that you might need and actually implement them into your chatbot, then you're probably not gonna get a good result, especially if you're heavy on, like, I need to perform some actions in your support. That makes sense. Okay. So Assembled's been around seven or so years now, going on eight, I suppose. Any low lows that you like, things that you think back at the company of just growing pains, or parts where you're like, man, that was a struggle. A lot of it actually had to do with this interplay between our existing product and our new LLM product. And I think at the time, I was just, like, so focused on, like, building in an LLM based product that I didn't put in as much time into, like, how do we bring the company along on this ride? And I think the types of things that our, you know, our team told us, which was like, hey. Like, we just had our CTO disappear into a room. Or it's like, hey. Like, you used to care about this, and you don't care about it anymore. It's like, no. I still care about it, but you might not have the same access to me as you once did. Yeah. Those are definitely tough times because it was trying to make sure that we were, a, ahead of the curve and, like, building this stuff out because that's, I think, where support's going, but also still making sure that we're bringing the whole company up. And going back, you probably would've done the same thing. You would've just done it with maybe better change management and communication is what you're saying, I think. 
I think so because I I I to this day, I'm like, I'm so glad we have an AI, like, an AI suite of products. Yeah. It's kind of like the fastest growing part of our business. It's a huge part of where our revenue comes from. I just think that we could've done it better and gotten people bought in more. We see that in just general in companies adopting AI. Right? Everyone thinks it's coming for their job. Yep. You gotta do that change management and say, make sure you're communicating it down, so makes sense. Okay. Last thing, it's twenty thirty. Customer service teams have everyone's been adopting this. Like, what does it look like? Are we is there a handoff? Has it gotten so good that we don't need even that tier three, four to be able to hand off, or are we far enough away that that's not even gonna be a thought by then? I think twenty thirty, you will see very few humans in frontline support. I don't think if you ask a question to a chatbot or you send in an email or even if you call, I don't think the default is gonna be human. I think the default's gonna be an AI. And I think you're only gonna see humans when you actually have, like, a particular issue that requires empathy or an issue where it's like, hey. I want like, humans want product knowledge here or, like, they they want to actively get more information, you're still gonna have all the same mechanics of support. You're still gonna have things that, like, don't make sense in the product, and you're like, what's going on here? Yeah. Right? Because, like, people the reason why support exists is kinda the paper over all of the stuff that doesn't work. Right? So that's always going to exist. It's just that the easy things to paper over that, like, you actually sometimes kind of want an AI to do, that will probably be handled by an AI. And I think humans are gonna be there when you really want, like, the white glove stuff and you really care about that. 
Like, the Four Seasons still exists, and people still book the Four Seasons because they want the experience. Do you think there's gonna be a shift towards people needing to be better about building the SOPs and the documentation to load in? Because if we get to this point where there's fewer and fewer humans, it's all gonna come down to what data we feed the algorithm. Yeah. Right? And right now, you point some bot at Confluence, and it's wildly outdated, you know, garbage in, garbage out. Yeah. Do you think that that's going to be a larger and larger component as we go on and there's more adoption of these AI tools? I think a hundred percent. I think so. Because even in our own working style, it used to be so hard for me to get someone to write, like, a product requirements doc or a technical design doc. And now it's just like, no, dude, here's my Claude Code template that writes one for you. Like Yeah. You just need to put in these basic pieces, and it'll write a good one for you, probably. And I think it'll become a lot easier. Now, is every company gonna do it? That's the one where I'm like, I'm not sure. So I still think it's more likely than not that you're gonna default to an AI, but I do think that, you know, things take a long time to change, and that might be where it takes some time for that to happen. Alright. John Wang, cofounder and CTO at Assembled. Thanks for being here. Love the conversation. Appreciate it. Yeah. Thank you.

How Will AI Transform Customer Support? [Ft John Wang, Assembled]

Customer support exists to paper over product gaps. As AI handles more tier-one resolution, the question isn't whether automation replaces humans, but where human intervention becomes luxury versus necessity. John Wang, Co-founder and CTO at Assembled, outlines the execution challenges leaders overlook when deploying AI support systems.

AI tackles straightforward requests, but high-touch service remains defensible. The Four Seasons commands premium pricing despite automation everywhere else in hospitality because customers pay for human judgment in complex situations. Support will bifurcate the same way. But the real constraint isn't model capability. AI performance is capped by what you feed it. Most companies point agents at outdated Confluence wikis expecting magic. Documentation quality now directly impacts support economics, and teams that treated internal docs as nice-to-have now face a forcing function.

Topics discussed:

- Bifurcation between tier-one AI automation and premium human support

- Four Seasons pricing model applied to customer support economics

- Documentation quality as primary bottleneck for AI support performance

- Outdated Confluence documentation creating AI failure modes

- AI-generated requirement docs reducing documentation friction

- Organizational discipline around SOPs determining AI adoption speed

- Internal documentation shifting to critical AI training infrastructure

Assembled is a San Francisco-based support operations platform founded in 2018, specializing in unifying workforce management with AI-powered automation for customer support teams. The company enables leading brands like Stripe, Robinhood, Etsy, and Salesforce to intelligently blend human agents, BPO partners, and AI agents/copilots across channels such as chat, email, voice, and SMS to deliver precise forecasting, automated scheduling, real-time analytics, and high-quality automated resolutions to scale exceptional customer experiences efficiently.

There was a period of time, I wanna say it was, like, late twenty twenty three, maybe early twenty twenty four, where it was like, my executives are telling me I really need to do AI, so I'm gonna buy literally anything that has AI on it, and the sales cycle ramped up really quickly. Then it was like, wait, that's dumb. I actually need to buy something reasonable here. And then we saw a sales spike for, hey, if you have a differentiated approach on AI, not just, like, sticking AI in front of everything, that's when you get the sales cycle. And now I think we're in a place where most people understand what AI is. Most people are looking for, kind of, how do you best integrate with our systems? Who's going to help me get there the best? And who's gonna provide nuance into how we actually add AI into how we work day to day?

One of the things that I thought was a really interesting use case that you've described is being able to route it to the right agent for that problem. How is AI getting that information? Is that at an admin level, you know, in the platform saying, John's the best person for this, or is it just listening and learning as to which agents handle certain things the best? I think the best AIs do both. I think that on one hand, there's a lot of context in people's brains that actually is very, very helpful. That's why humans do so well in support, because they just, like, soak up all this context. Right? But on the other hand, AI systems do well when you can look over a bunch of data and ingest it, or if you can run a system and start ingesting memories and, like, extra context about a person. And so what we do is we actually do both.

Something that people might not know is that most support teams already have built-in layers of escalation. So there's, like, tier one support, which oftentimes is, like, when you call and you get an Indian or Philippine call center, that's often tier one support and is very, very cheap. But that's not where it stops. There's, like, multiple tiers of escalations above that. Tier two support is usually like, hey, how do I handle this escalation? What do I do if someone calls in with, you know, a domestic abuse case, things like that. There's also tiers above that where people are actually thinking about how do I tune systems. Like, what types of support requests am I getting all the time? And, like, how do I go and update our systems or update our knowledge base to do that better? There are people who have literally become so good at doing support, and they're, like, product experts now, that they are the ones writing the support articles that then fuel the AI agents. Humans aren't going away, but their job might change. And it might change quite a bit, but I think the stuff that humans still provide is a lot of context.

If a company's thinking about implementing some kind of AI customer service product, is there anything to be like, we don't know exactly if this is the right way to handle it, but, like, you guys should listen to this call? Especially at the enterprise level where there's probably hundreds of thousands of calls, there's no way to manually review them. What are those flags that can tell someone that this might be going off the rails? Usually, they fall into a few different buckets. One of them is flagging, which is alerting on any conversation that looks bad. And in our product, what we do is we have a bunch of processes that are, like, looking at every single conversation that comes through and checking against many different criteria as to, like, you know, was this person really frustrated? Did we cite internal information? But there's also the in-the-moment kind of flagging, which is when you generate a message, you can actually decide on whether or not to send that message, and you can stop that message and basically be like, hey, this doesn't pass all my criteria. We call them guardrails.
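
The two buckets described above, after-the-fact flagging of whole conversations and in-the-moment guardrails on a drafted message, can be sketched roughly as follows. This is a minimal illustration, not Assembled's implementation: the keyword lists, the `Check` type, and the function names `guard_draft` and `flag_conversation` are all hypothetical, and a production system would more plausibly use classifiers or model-based graders than substring matching.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical criteria for illustration only; keyword matching stands in
# for whatever classifier or grader a real system would use.
FRUSTRATION_WORDS = ("ridiculous", "useless", "cancel my account")
INTERNAL_MARKERS = ("[internal]", "internal-only")


@dataclass
class Check:
    name: str
    fails: Callable[[str], bool]  # returns True when the text violates the criterion


def cites_internal_info(text: str) -> bool:
    lowered = text.lower()
    return any(marker in lowered for marker in INTERNAL_MARKERS)


def sounds_frustrated(text: str) -> bool:
    lowered = text.lower()
    return any(word in lowered for word in FRUSTRATION_WORDS)


# Guardrails run on a draft before it is sent.
GUARDRAILS = [Check("no_internal_info", cites_internal_info)]


def guard_draft(draft: str) -> tuple[bool, list[str]]:
    """In-the-moment check: decide whether a generated message may be sent."""
    failed = [check.name for check in GUARDRAILS if check.fails(draft)]
    return (not failed, failed)


def flag_conversation(customer_msgs: list[str], agent_msgs: list[str]) -> list[str]:
    """After-the-fact check: list reasons a human should review this conversation."""
    flags = []
    if any(sounds_frustrated(m) for m in customer_msgs):
        flags.append("customer_frustrated")
    if any(cites_internal_info(m) for m in agent_msgs):
        flags.append("cited_internal_info")
    return flags
```

In this sketch a draft that fails any guardrail is held back rather than sent, while flagged conversations are only surfaced for review, which mirrors the distinction between stopping a message and alerting on a conversation.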

Any, you know, common mistakes that you're seeing with companies who have customer support teams? I've seen teams spend six to twelve months, like, building out, here's what's gonna happen with my chatbot. I'm going to test internally, and I'm going to make sure I test every nook and edge case, and I'm gonna put all these guardrails on there, and I'm gonna make sure that when it comes out, it's gonna be the best thing since sliced bread. And then as soon as they turn it on, it's like, someone asks a question that no one has ever thought of. Right? The experts internally who were testing it never thought of probably the most common question. Right. Because, like, you're not your users, usually. I think going too slowly can be a real hindrance because you end up building stuff or, like, trying to find solutions to problems that didn't ever exist, and the real problems are out there that you still haven't uncovered.

I think twenty thirty, you will see very few humans in frontline support. If you ask a question to a chatbot or you send in an email or even if you call, I don't think the default is gonna be human. I think the default's gonna be an AI. And I think you're only gonna see humans when you actually have, like, a particular issue that requires empathy or an issue where humans want product knowledge or, like, they want to actively get more information. But I think that you're still gonna have all the same mechanics of support. You're still gonna have things that, like, don't make sense in the product, and you're like, what's going on here? Right? Because, like, the reason why support exists is kind of to paper over all of the stuff that doesn't work. Right? So that's always going to exist.

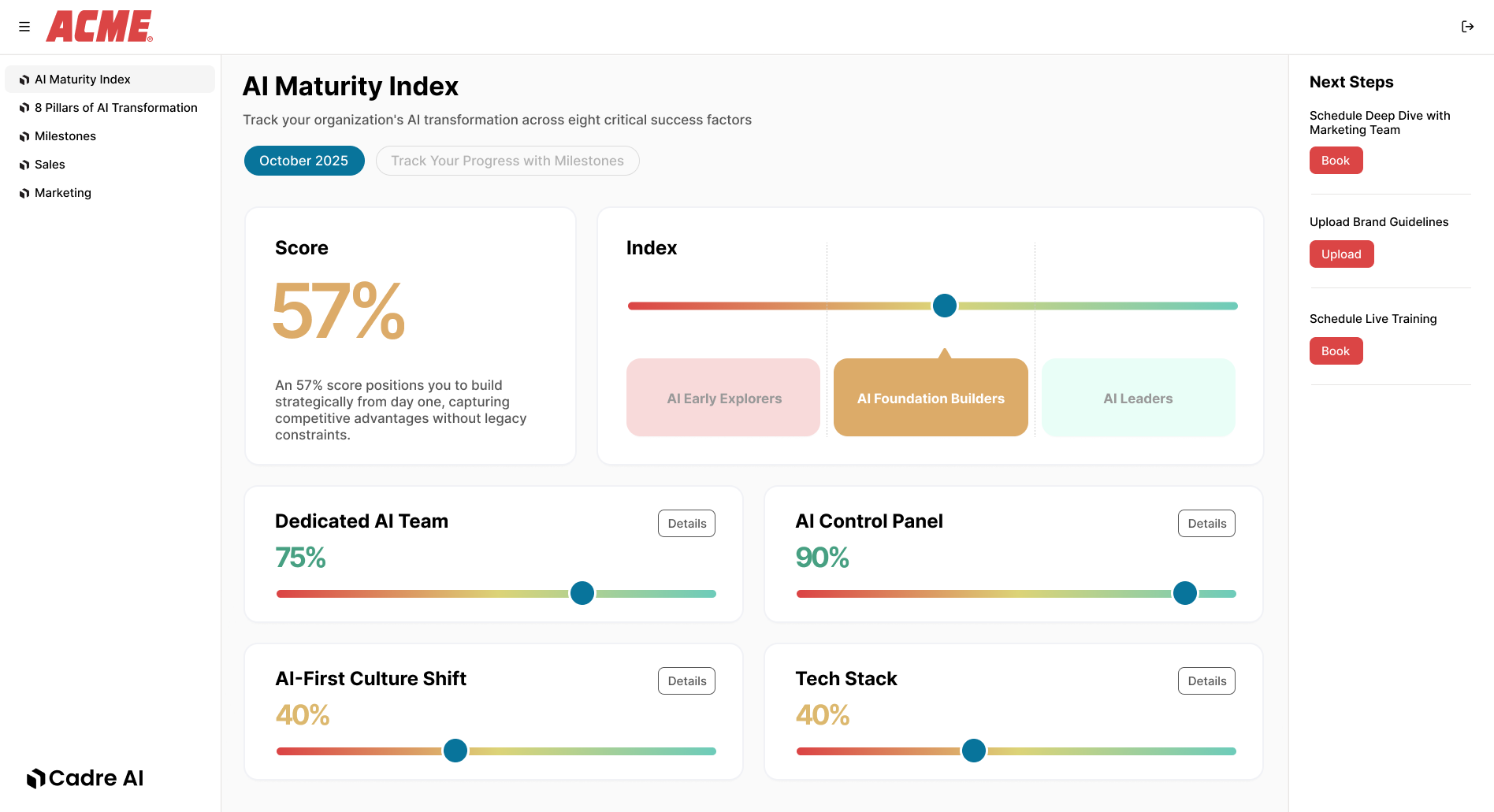

Track your AI results