Why Do 90% of Enterprise AI Implementations Fail? [Ft. Eva Nahari, Former CPO, Vectara]

There's an MIT study from twenty twenty five that said something like upwards of ninety percent of AI implementations are failing. So hallucinations are, like, the problem. Accuracy is the blocker. What everyone wants is adoption and ROI. If the answer coming out of this black box isn't helping me in my everyday, then I'm gonna go and do what I used to do. So RAG is actually emerging as the standard enterprise approach to trustworthy generative AI. Fast forward to twenty thirty, what does the world look like? I think, from RAG, it's gonna evolve to agents. Last question I have for you is, do you think the LLM structure is here to stay, or will something better replace it?

So we have the CPO of Vectara, Eva Nahari, here with us. Welcome. Thank you. I'm honored. I wanna jump right into it, because we're gonna talk about a lot of stuff today, but the big conversation we're gonna have is RAG. RAG. Some people know it. Some people don't know what it is. What is it? The acronym stands for retrieval-augmented generation. What does it mean? Well, if you ask a question to an LLM today, a big general model, you get an answer based on what that model has seen and the probability of the order of words. Right? So what it has seen somewhere, and then what's more likely to be the output, simply speaking. RAG is that you actually provide the data it can look at to generate the answer. So it's like taking a test, but you have the book next to you. You can actually go and see the accurate, factual data, if the book is correct. You know? But you have the raw source data available in the process of generating the answer. The power of the human-like interaction, but with a more reliable foundation of actually trusting the data, especially if it's enterprise. Especially if it's enterprise. So I'm sure there's a lot of smaller companies, people that go, hey.
We have an HR person spending most of their day answering questions that are in the handbook. Let's load our eighty-page PDF into a custom GPT and say, answer questions. I would rather keep it outside the custom GPT. Right. Because it can still hallucinate in there. And the more you can force the LLM to generate from what you actually feed it, the context, the more likely it will be accurate. So even training custom models is fragile, because it ties the data to the model, whereas it's easier to switch out the data on its own. Let's say the book was wrong. Let's say there's new HR policies. You will switch it out. It's easier to decouple the raw data from the model and serve the precise piece of information needed at the point of generation. So RAG is actually emerging as the standard enterprise approach to trustworthy generative AI, or agentic AI. So for companies that are like, hey, AI sucks, it doesn't work, because I loaded my eighty-page PDF and said, answer the questions, and they're wondering why it's, you know, not reading the full eighty pages before answering, or it's, you know... Yeah. It's because a proper RAG system isn't in place to be able to say, let's parse this data ahead of time and send the LLM what it actually needs, versus an entire eighty-page PDF. Yeah. You need to help the AI to be successful, and you need to understand that there's a lot of plumbing and piping around it to get it real and useful. And usefulness and adoption come from accuracy. If the answer coming out of this black box isn't helping me in my everyday, then I'm gonna go and do what I used to do. Right? Right. So accuracy is more important than you think, even in a simple, day-to-day context. There's an MIT study from twenty twenty five that said something like upwards of ninety percent of AI implementations are failing. Yep. What does failing mean? It doesn't mean that they're not finishing them. It's that they're not seeing the value out of it.
They're not replacing the manual process they were supposed to. Yes. Right? And that comes down to garbage in, garbage out in a lot of cases. Garbage in, or great things in but still garbage out. Or skills: not understanding what it takes to get it right. But in the end, it's the garbage out that prevents adoption, that prevents it from going to production and scaling. If no one is using the system, why would you roll it out? Right? What size companies are using RAG today? Is it mostly at the enterprise level? You know, I have a slightly biased view there, I'm aware, because I only work with enterprises. So I don't know what the SMBs are using. Right. I think enterprises have landed on RAG because of the increased accuracy, but also the separation of data, so they can follow compliance. They can show the trace that this data was involved in this workflow. Many times, big enterprises are under regulatory requirements to show where an answer came from, or that an offer you send to an insurance client follows certain standards and rules. It can't be biased and skewed towards a certain age or a certain ethnic background. Right? There are a lot of rules and policies you need to follow. And separating the data from the model makes that process easier in regulated industries. I remember, I think it was the Georgia Institute of Technology that had that automated driving study. Right? The self-driving car thing. Do you wanna tell me? If I recall it correctly, it was trained on data and then put into production, and then it kind of disregarded people of color, especially in the dark at night, in that self-driving car scenario. And that is, like, yeah. You have to look very carefully at what data goes in if you train a model.
But if you just train the model to generate language, and then you yourself are responsible for the data going in at any point in time, I think that simplifies those scenarios and increases not only the accuracy, but the control of the dataset if it needs to change. And that will address biased situations better than if one team trained a model a certain way and then later discovers that it was completely skewed. Right. So you said one team trains the model. That's interesting. So are there instances where large enterprise companies have multiple RAG models across the business? I think that is a growing problem. It's easy to stitch a little hobby RAG together, and many teams within a big, large enterprise have done that. And the POC stage is beautiful. It's like, oh, my skills. It's exciting. It's new technology. Like, I've been there. I'm also excited. But then when you start rolling it out at scale, you have to show transparency for audit trails. You need to cover citations, or you need to have fine-grained access control handled better, or you just can't get the retrieval process right. You really need to fine-tune how you retrieve pieces and what pieces are more relevant for the task. It suddenly starts becoming hard. But in POC land, it's easy, or challenging enough, you know. Yeah. But fun to do. And then the hard part starts when you go into production. But, therefore, you see these fun, exciting teams all over the place building their own RAGs, and suddenly you have RAG sprawl. And IT goes like... Yeah. And we can't even scale one, because we haven't figured out the infosec yet. The data governance. Oh my goodness. They're getting gray hair as we speak. Right? So it's become RAG sprawl this year, and I'm kind of seeing it shift in terminology, but it's the same, or maybe 10x worse, which is agent sprawl. Now you have these little self-driving functions that go and actually do transactions for you. Yeah. Do you trust them? How do you control it?
Where is the data trace? Where is the governance? Like, I'm just looking at twenty twenty six. It's like, okay, it's gonna be 10x worse. Yeah. You have a long background talking and thinking about this stuff. You started as a software engineer, and you actually wrote your master's thesis in two thousand two? Oh, now you're dating me. But okay. Yes. Two thousand two, in reinforcement learning. It was super cool technology back then. Still is. But, you know, yeah, I was in AI before it was cool. Before it was cool. Yeah. And then nine years at Cloudera. Yes. Right? And that was during the big data boom. Any lessons you took away from that that you're able to apply today? Every day. Like, first, the enterprise empathy. Let's say you're a small startup. It's like, oh, I built this great thing. You just have to move all your data into this structure, in my place, and do it the way I have envisioned it. Right? That's not reality. Enterprises are messy. They have data from, like, twenty different acquisitions or more. They have legacy systems like mainframes. So, like, think about that. There's so many different systems that you have to nicely coexist with or integrate with, or see the bigger picture of what rules, processes, and regulations an enterprise needs to meet. Even just acquiring a piece of software is a whole new journey for them. So, like, all these pieces of empathy, I apply every day, but with my enthusiastic startup energy, you know, in the background. And it's a journey. So that's, I think, if I have to summarize everything I learned at Cloudera, which feels like a lifetime. Yeah. Every stage was different too.
But in the stage we're in, it's about building the trust to take the journey together, understanding the pains and the situation the enterprise is in at that point in time, and guiding them on how they can safely adopt this, safely scale it in a partnership, and also make it successful together, not just close the deal and leave like many companies. We're talking about enterprise, and you're talking about the level of complexity of the data and the antiquated stuff. I've been in companies that are, you know, a thousand employees, and I look at our Confluence, and you've got AI pointing at it where you could ask it any question. I'm looking, going, there's a million outdated policies in here that we've never cleaned up. How is AI gonna know that this is outdated and not give somebody the wrong answer? Data management and data governance, with this Gen AI era out of the closet, is gonna be 10x more important. That's another thing from the big data era: some people just threw every set of data in this data lake, and then you were in a data swamp. Right? The ones who had a clear business goal, clear ROI expectations, and then took precautions to get the right data in to meet that business objective, they were successful and could scale and repeat. With the right architecture to be able to connect, right, as they grow. Yeah. Because the whole idea is that AI allows you to scale more without more headcount or more overhead. Exactly. If it's gonna help and you're gonna scale, you also need to make sure you have the people that are cleaning the data to ensure that what you're feeding in is accurate. Right? And I think it's an upfront friction, an upfront heavy lift, to then just spin out three hundred use cases. And that's what we see with our customers: you start with three, and then a few months later, it's twenty five.
A few months later, it's three hundred. So if you get it right and you see it's not just talk, like, if you see the results, then it's easier to get the next projects running and more support through the organization. Yeah. I spent five or six years in-house in portfolio operations in private equity, and I know you were four years or so in venture capital. Yes. Quite different in terms of the, you know, portfolio companies that they're investing in and everything. But during your time, you invested in, what, fourteen AI companies? I think seven. And probably thousands of CIMs that you, you know, disregarded or whatever. What are you taking away from that time to be able to apply to today? First of all, there's a big hype that has kind of fueled itself, and it's like the snowball effect. So we're in, like, super hype now on what AI can and cannot do and what it can produce immediately. While on the other hand, for enterprises, it's gotten more and more complicated to make a selection. Like, there were, like, six hundred and fifty startups in a quarter with, like, "agentic" in it, some quarters ago. Right? It's an overwhelming speed, noise, and uncertainty. Guess what enterprises do? They take a step back and, like, okay, we need to think this through. So sales cycles have slowed down. Yeah. And then the pressure from the board is like, you have to get into AI or else we'll fall behind. There's a very high tension in the market. There's VC hype, startup Kool-Aid, enterprise slowdown, hesitation, fear, and fear of falling behind, fear of making the wrong choice, fear of everything. Like, that doesn't make sense equation-wise to a mathematician like me, or at least a wannabe mathematician. But I think it leaves this interesting taste of, like, okay, the way to really navigate this four-year chaos that I was part of, on one side of the table and now on this one, is to focus on what's real.
Focus on real business value. Focus on delivering results with the customer. Focus on the customer's needs. Help guide them through the journey. And then all the marketing noise will implode on itself eventually. That's at least where I'm at: be level-headed, be sober. Someone needs to be sober in this context. I think there's another layer too, in the gap between, you know, the VCs hyping it and the AI startups selling it. You've got this middle layer, before you get to the enterprise, that I've seen on the PE side, where I can't think of a PE board meeting I've been in over the last four years where there hasn't been some talk of AI. Yeah. Well, maybe not four, maybe two. And what we're hearing from our customers at CADRE, a lot of them are private equity backed, is that it's coming up in every board meeting. They're like, what is your AI strategy? Yes. And so there's this hype going on: agents are here. They can do whatever you need. Right? And they're just like an employee that you train and you let go. And then they wipe out your hard drive. Right. Or, I mean, think about even just a simple prompt: people give it a very simple prompt and say, why am I not getting the best results? It's the same thing as when an employee comes in. You can't give them one sentence and expect them to do their job well. Like, you gotta give them context. You gotta give them SOPs. You gotta say, this is what we expect from you. If we're looking at next year, I think it's gonna be more about how you provide the right context to the agents, how you put guardrails in place, how you guide them to get back on track if they're slightly going off the trail. And we talked about hallucinations maybe a little bit: generative models are very creative. Yeah.
They're incentivized to come up with context and sentences and things. But, really, for the enterprise workload, it needs to be the opposite. Don't be creative. Right. Be consistent. Be, you know, truth-grounded. And then all these guardrails also need to protect agentic workflows, which can also hallucinate, by the way. So even in a multi-agent workflow, the first step can go a little wrong. But guess what happens if it keeps going? Right? Yeah. So it's 10x worse down the road. Yeah. Very, very bad decision. Right? So you have to dynamically course-correct, and this is something I'm passionate about: real-time governance. There's a new concept that's come up that's called guardian agents, okay, that can kind of evaluate tool choices: should we take path A or B? But you missed out on C. Like, what makes sense here? So some kind of feedback loop for course-correcting agents as they go; that's what I mean by real-time governance. You govern the agents in the right direction. Have you seen instances, and I'm asking because I've heard this to be the case but haven't seen it in true application, of using two agents, where you tell one, your job is to do this thing, and the other one, your job is to poke holes in that thing and feed it? Yes. So essentially, there's gotta be a human in the loop that's gonna ultimately look at this and say, is this the right answer that we got? But can you successfully use agents to kind of correct each other before it gets to that human? Yes. You can, if you have insight into every step of the pipeline. So that's why I believe building an end-to-end RAG platform, or an agentic RAG platform, is the key, because then you can know not only what the truth is but also the intent of the query. Intent logging is another thing I think is gonna start popping up. You need to understand the context, but also the intention of this workflow.
And the more context, along with intention, you can provide to guide and course-correct the agentic workflow along the way, the better the outcome. I think hallucinations will always happen. Yeah. And having a human in the loop is one way. But now, when agents are gonna decide what tools to use and what path to take, it's actually inefficient to have a human at every step along the way. Then it's almost more efficient to have the human do everything, and you don't get the automation efficiency. Right? So you need something at those steps too. It's almost like I wanna turn it around: agent in the loop. You have the human workflow, but then you have an agent helping with the compliance checks that are, like, tedious, aggregating information and asking questions. And then you continue, and then you do this step. But the agents themselves also need these guardrails to really get it right. Well, they say, like, if you're thinking about a workflow and it's all manual, the next version of it is, let's get some humans monitoring it. They're dropping something into, whatever it is, a custom GPT, it's helping, and they're taking it out. Work that until it's working really well, then automate it. Then you can maybe move to agents, and then maybe you're moving to this full system. You're working with businesses that are ready to build that full system right off the bat. So hallucinations, as we mentioned, are probably the biggest concern any business is gonna have: we can't monitor every answer this is giving our employees. Or can we? Can we? So I guess the question comes down to: how should they be thinking about monitoring the accuracy of these workflows they put in place? Yep. So hallucinations are, like, the problem. Right? Accuracy is the blocker. What everyone wants is adoption and ROI. Right?
So it's all tied back into this problem of hallucinations: not just incorrect data being fed into the pipeline, or the model being trained incorrectly if you do it yourself, or, like, hallucination, generating answers that aren't backed by data. But also, the retrieval step could be a problem if you get non-relevant pieces of information in a RAG pipeline. So accuracy is complex. I'm trying to share that it's, like, multidimensional. You need to look at the data, and you need to look at every step of that RAG pipeline. Like, retrieval is not easy. Right? So you can't just have a vector database match. You need to put some kind of semantic understanding or hybrid search in there to get relevance. What should I send? Then the model choice will be another step, and then detecting if the output is still hallucinating based on what was sent to the LLM. Right? But we can course-correct that automatically. You don't have to have a human in the loop. Most enterprises want a human in the loop to begin with, to build the trust. But over time, if they can see the system actually course-correcting where there are faults, using guardian agents or some other guardrails, right, you can take more and more of a step back. That's why I speak of it as a journey. It's not overnight. Yeah. You have to learn a lot about this. You have to prepare each step in the right way. There needs to be people management, like change management, involved. There needs to be time to wrap their heads around how to interact with this system that's supposedly gonna help them. It's a journey. So you can't have the expectation of, like, push the button and ChatGPT will solve everything for me, if you're gonna solve enterprise workloads. It is a journey. It needs a lens and a deep dive in each step of that journey, but then when you get it right, you get to scale massively. Yeah.
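
The step described above, detecting whether the output is still hallucinating relative to what was sent to the LLM, can be illustrated with a deliberately crude check. This is a minimal sketch, assuming word overlap as a stand-in for a real hallucination detector (production systems typically use a trained evaluation model); the function names, the stopword list, and the 0.6 threshold are hypothetical choices for illustration only.

```python
import re

# Hypothetical, tiny stopword list; word overlap is only a toy proxy for groundedness.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "for", "per", "on", "with"}

def content_words(text: str) -> set[str]:
    # Lowercased words with stopwords removed.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def unsupported_sentences(answer: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    # Vocabulary of everything that was actually sent to the LLM.
    source_vocab = set().union(*(content_words(s) for s in sources))
    flagged = []
    # Split the answer into sentences and score each one against the sources.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        support = len(words & source_vocab) / len(words)
        if support < threshold:
            # Too little of this sentence is backed by the retrieved text: flag it.
            flagged.append(sentence)
    return flagged

sources = ["Employees accrue 15 vacation days per year."]
answer = "Employees accrue 15 vacation days per year. Unused days roll over indefinitely."
flagged = unsupported_sentences(answer, sources)
print(flagged)  # ['Unused days roll over indefinitely.']
```

A flagged sentence is a point where a pipeline could retry retrieval, drop the claim, or route it to a person, rather than passing it straight to the user.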
But even if you had the stakeholder level, call it C-suite or VP level, at an enterprise saying, yes, we understand this is a journey, if they're not doing the proper change management and communication down to the people on their team that are using it, there's probably still gonna be that expectation of: I pressed the button. I've always done it this other way. I pressed this button, didn't get the answer. Sucks. I'm going back to the original way. Yeah. What we've learned with our customers is that there's always a deployment phase, an implementation phase, and then there's a rollout phase. And that could look very different depending on which industry or company you work with, but you let people give their feedback. You listen to people: does this work? And you take that very seriously. If this AI system doesn't do 10x what the person would do to help them do their job, there will be a lot of double work. You have to build the trust, and trust comes by listening, getting the feedback, and working that into the journey or process. Yeah. Then they will see the results. Getting into a little bit of the details, I'm curious about the hallucination side: why does AI hallucinate? You said it's built to be, you know, inventive. That's part of it. But then there's also different types of what we might call hallucinations, which may just be, like, a concatenation error between data. Right, and others. There's a lot of reasons, I would imagine, that the answer could be wrong or start veering off along the way. There are many reasons. Even, like, as I mentioned, in the agentic world where tools are selected, now they're picking the wrong tools. It's not hallucination the way we have gotten to know it, but it is. It's making a choice that is not aligned with the intent. Right?
So there are many faults that can happen, and they come in different flavors. But the important thing is to catch them before you get to action. Right? So catching them, detecting them, and you can compare A and B. And having a model that says, I'm not sure, I found two sources. Give it transparency, and not the confidence that the big general models have been trained to have. Right. We should reflect on ourselves more. Like, we come and say, hey, I found two sources, which one? We check in with our leadership or manager, hopefully, if you work in a good company. But you double-check with other people if you can't move forward, and the model should treat you as such. Like, okay, I don't know which choice to make here. Can you guide me? Right. Can you provide more context? And that part has improved a little bit, but I'm talking about the agentic interaction. It's very hard to change these big models to have that. It requires a lot of retraining. It's just the nature of the ChatGPTs of the world to be very confident. Yeah. And, like, I can do this. Yeah. But did I give you permission to? No. Oh, sorry. Sorry. But that doesn't mean anything when your hard drive is gone. Right. Okay. So you've had this long history in the space, AI before AI was cool. Here you are at Vectara. Like, why? What's exciting about what this company is doing that gets you up every day, eager to go in? So I think I'm gonna reflect back on different chapters of my life to give you the answer. The first chapter was, like, engineering innovation. AI before anyone talked about it. I was like, this is the thing that will change the world, and I was very naive but very happy. And I tried to make it happen. Like, in the end, I was implementing it into the Java Virtual Machine, like, infrastructure technology. At least I got that. But the world wasn't ready. And then my track took me to the next chapter of infrastructure.
So I built a lot of infrastructure for many, many years. I'm not gonna tell you how many. But there, I fell in love with the customer. Like, really, going from technology to actually building value, changing something for the world in Cloudera's days, but also for the customer. Like, they're trying to achieve a next goal, and it's so important for all the people working around that. I wanna help them. Right? And then the pandemic happened, and I had a crisis. I was like, okay, I can do this product and go-to-market in my sleep. I felt a little bit demotivated there, mostly the pandemic's fault. But I accidentally ended up in VC. I needed something new, something completely different. Yeah. And I thought it was very exciting to build many companies instead of one. Right? I wanted to share all this experience I have with distributed data and real machine learning and NLP in production. Now it's happening. And then I came to VC and, like, one month in, give or take, ChatGPT. I was like, oh my goodness. Now it's really happening; it's out of the closet. People see what I saw. You know, I've been talking about NLP; Word2Vec was the thing back then. But, like, I really saw how natural language can change how we interact with systems, unlocking us from, like, looking at screens. Right? We need to come back to some more interesting interaction with systems that do things for us. That was my original passion back in the game. And VC was amazing. It taught me a lot of empathy for founders and other VCs, and it's a hard, hard job. But after four years, I watched the world go down this track of, oh, let's just adopt it, without understanding how bad it can go, which I know from all my reinforcement learning, like, even twenty years ago. Yeah. Like, it can go really bad, because the AI is just limited to what you provide. And you have to guide it the right way.
And then Vectara is, like, what I believe is building the infrastructure, almost like an agent operating system, to protect the applications running on it from doing the wrong thing. And that's what I'm passionate about today. I wake up in the morning, and I wanna go and help enterprises do this the right way, based on everything I know. Right? And the team is awesome. Like, team, number one. Yeah. Passion, number two. Three, the approach we take. Yeah. Love to hear it. Let's say we get RAG right. Everyone gets RAG right. Fast forward to twenty thirty. What does the world look like? What's different? Well, then my job is done. No. I think, from RAG, it's gonna evolve to agents, which is not just fetching data from your controlled resources. You're gonna see agents fetching data from any SaaS API, like Salesforce, NetSuite, what have you. I'm old school. Through traditional MCPs, do you think, or do you think MCPs are not gonna... I mean, MCP is the thing now, and that in itself is gonna create chaos and access security issues for quite some time. But that might evolve. Other standards might come. But the thing is, like, all this data retrieval from different places, and then you're gonna exchange credentials or do something on behalf of someone, although that person doesn't have access to Salesforce. Like, how are you gonna figure that out? Right. I think that is the next phase of all this: the chaos of data retrieval from different systems, and making sure it got the right data at the right time with the right purpose, the intent again. And that the person was allowed to access that data. Spot on. Right. So what we're looking forward to is, hopefully, a lot of fantastic ROI thanks to proper RAG, but the chaos is gonna continue. There's gonna be a lot of room for new innovations, new startups, but, hopefully, enterprises will have learned more through this first journey of RAG.
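
The access question raised here (was the person on whose behalf the agent acts allowed to see that data?) is commonly handled by filtering on permissions before ranking for relevance. This is a minimal sketch, assuming each record already carries the groups allowed to read it; the `Document` type, the system labels, and the group names are hypothetical and are not the Salesforce, NetSuite, or MCP APIs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    system: str                     # illustrative source label, e.g. "crm" or "erp"
    allowed_groups: frozenset[str]  # groups permitted to read this record
    text: str

def retrieve_for_user(query_terms: list[str], user_groups: set[str],
                      corpus: list[Document]) -> list[str]:
    # 1. Access control first: keep only records the requesting user could open themselves.
    visible = [d for d in corpus if d.allowed_groups & user_groups]
    # 2. Then relevance: a naive keyword match stands in for real retrieval.
    hits = [d for d in visible if any(t.lower() in d.text.lower() for t in query_terms)]
    return [d.doc_id for d in hits]

corpus = [
    Document("opp-17", "crm", frozenset({"sales"}), "Renewal discount offered to Acme"),
    Document("inv-42", "erp", frozenset({"finance"}), "Acme invoice is 60 days overdue"),
]
print(retrieve_for_user(["acme"], {"sales"}, corpus))  # ['opp-17']
```

Filtering before ranking means a record the user cannot open never reaches the model's context, so it cannot leak into an answer or an action taken on that user's behalf.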
Last question I have for you is, do you think the LLM structure is here to stay, or will something better replace it? I think LLMs will be here for quite some time now. It's like the new database. And, I mean, I squinted my eyes when I said that, but, like, that's the way you access data from now on, I think. Will there be new algorithms to make it more efficient? Yes. Probably. Hopefully. AI research must continue. And will there be new innovation around getting it more and more right? Yes. That's the mission I'm on. So are hundreds of people, I bet, and I encourage others to kinda take this on, because then the overall goal will be met. Will there be new architectures? Like, agentic is still forming. I think there will be new jobs. Yeah. Like agent designer, agent developer, agent administrator, or orchestrator, something there. Maybe agent architect. I mean, that's the new... Quality assurance. Right? Isn't it kinda crazy that, like... Yes. It's quality assurance. You know? Yes. Fact checkers. Yeah. There's some things that we think AI is, like, great at now, that can replace humans doing this. We might have a full-circle moment where we have to hire so many humans in the future to fact-check the AI. Fact checkers. Right? They're all gonna come back. It's gonna be a resurgence. Yeah. One of my customers told me that their son in school gets to use ChatGPT for homework. Yeah. They have a question, they get the answer, and then they're gonna fact-check everything. That's their homework. So the world is already changing. So we're building quality assurance specialists in hyper built settings. Yes. A good thing if we're gonna go down this path and trust AI. Yeah. Like, I'm trying to build it automatically so we don't have to. Good. Well, let's hope you succeed. That would be nice. Hope so. Yeah. Alright. Eva Nahari, CPO at Vectara. Thank you so much for being here. Appreciate it. Thank you.

RAG isn't just another AI buzzword; it's the architectural foundation that determines whether enterprise AI delivers value or burns budget. Eva Nahari, former Chief Product Officer at Vectara and a four-year venture investor, explains why separating data from models matters more than the models themselves, and why, by one 2025 MIT estimate, upwards of 90% of AI implementations fail at the execution layer, not the technology layer.
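
Keeping data separate from the model is also what enables compliance traceability, as the conversation notes for regulated industries: you can show which data was involved in a given answer. This is a minimal sketch of such an audit trail, assuming an in-memory list of JSON lines; the `AnswerTrace` fields, the source IDs, and the model name are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class AnswerTrace:
    # One audit record per generated answer.
    question: str
    source_ids: list[str]  # which retrieved passages were sent to the model
    model: str
    answer: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def log_trace(trace: AnswerTrace, log: list[str]) -> None:
    # Append-only JSON lines; a real deployment would use durable, tamper-evident storage.
    log.append(json.dumps(asdict(trace), sort_keys=True))

def answers_citing(source_id: str, log: list[str]) -> list[str]:
    # Audit query: which answers drew on a given source?
    records = [json.loads(line) for line in log]
    return [r["answer"] for r in records if source_id in r["source_ids"]]

audit_log: list[str] = []
log_trace(AnswerTrace("Max term for product X?", ["policy-2024#p3"], "example-llm", "30 years"), audit_log)
log_trace(AnswerTrace("Eligibility age?", ["policy-2024#p9"], "example-llm", "18 and over"), audit_log)
print(answers_citing("policy-2024#p3", audit_log))  # ['30 years']
```

Because the sources are recorded outside the model, replacing an outdated policy document changes future answers without retraining, and the log still shows which version fed each past answer.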

The common approach, dumping an 80-page PDF into a custom GPT, fails because accuracy requires proper data architecture, not better prompts. RAG addresses this by feeding models precise context rather than expecting them to ingest everything at once. But implementation creates new problems: multiple teams building isolated RAG systems across the same enterprise, creating governance nightmares when those hobby projects need to scale. The companies succeeding aren't the ones with the best AI talent; they're the ones who treated data management seriously before the AI hype arrived.
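
The alternative to dumping the whole PDF can be sketched in a few lines: split the document ahead of time, pick the few passages relevant to the question, and send only those with an instruction to stay within them. This is a minimal sketch, assuming word overlap as a toy stand-in for real retrieval (which would use lexical, semantic, or hybrid search); all function names and the sample handbook text are hypothetical.

```python
import re

def tokenize(text: str) -> set[str]:
    # Lowercased word set; a production system would use a real tokenizer or embedder.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_into_chunks(document: str) -> list[str]:
    # Parse the document ahead of time: here, one chunk per blank-line-separated paragraph.
    return [p.strip() for p in document.split("\n\n") if p.strip()]

def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank chunks by word overlap with the question; ties keep document order.
    q = tokenize(question)
    scored = [(len(q & tokenize(c)), i) for i, c in enumerate(chunks)]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [chunks[i] for score, i in scored[:k] if score > 0]

def build_prompt(question: str, passages: list[str]) -> str:
    # Send the LLM only the relevant passages, and tell it to stay inside them.
    sources = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )

handbook = (
    "Employees accrue 15 vacation days per year.\n\n"
    "Remote work requires written manager approval.\n\n"
    "Expense reports are due within 30 days."
)
chunks = split_into_chunks(handbook)
question = "How many vacation days do employees get?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

Updating the handbook only changes the chunks, not the model, which is the decoupling of data and model that the conversation argues for.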

Topics Discussed:

- RAG architecture separating data from models for compliance traceability

- Retrieval quality as the primary bottleneck before generation accuracy

- RAG sprawl problem from independent team implementations across enterprises

- Real-time governance systems using guardian agents for multi-step workflows

- Intent logging requirements for auditing agentic decision paths

- Agent-in-the-loop pattern replacing human-in-the-loop for workflow efficiency

- Documentation quality emerging as critical AI infrastructure investment

- Model Context Protocol (MCP) adoption for cross-system data retrieval and access control

Vectara developed an integrated AI assistant/agent solution focused on enterprise readiness, especially accuracy (eliminating hallucinations), explainability of results and actions, and secure access control. More technically, under the hood Vectara provides a serverless, end-to-end retrieval-augmented generation (RAG) platform that combines multilingual hybrid (semantic + lexical) information retrieval with AI-generated responses and actions, while giving developers the option to tune its behavior rather than tinker with its internals (analogous to giving a database a hint to change its join strategy versus rewriting the join algorithm by hand).
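Vectara's actual retrieval pipeline isn't described here, so the following is only a minimal sketch of what "hybrid (semantic + lexical)" retrieval means, assuming a simple linear blend of a keyword-overlap score and cosine similarity over precomputed embeddings. The function names, the `alpha` weight, and the toy three-dimensional "embeddings" are all hypothetical stand-ins; a real system would use something like BM25 and a trained embedding model.

```python
import math
import re

def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that isn't a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())

def lexical_score(query: str, doc: str) -> float:
    # Share of query terms found verbatim in the document (a crude stand-in for BM25).
    q_terms = set(tokenize(query))
    if not q_terms:
        return 0.0
    return len(q_terms & set(tokenize(doc))) / len(q_terms)

def cosine(a: list[float], b: list[float]) -> float:
    # Cosine similarity between two embedding vectors (the "semantic" signal).
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def hybrid_rank(query, query_vec, docs, alpha=0.5):
    """Rank (doc_id, text, embedding) tuples by a blend of lexical and semantic scores.

    alpha=1.0 is pure keyword matching; alpha=0.0 is pure embedding similarity.
    """
    scored = [
        (alpha * lexical_score(query, text) + (1 - alpha) * cosine(query_vec, vec), doc_id)
        for doc_id, text, vec in docs
    ]
    # Stable sort on score only, so ties keep their input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored]

# Toy corpus with made-up 3-dimensional "embeddings".
docs = [
    ("refund-policy", "Refunds are issued within 30 days of purchase.", [0.9, 0.1, 0.0]),
    ("shipping", "Orders ship within two business days.", [0.1, 0.9, 0.0]),
    ("returns-faq", "How do I send an item back for my money?", [0.8, 0.2, 0.1]),
]
query, query_vec = "when are refunds issued", [0.85, 0.15, 0.05]

print(hybrid_rank(query, query_vec, docs, alpha=0.5))
# ['refund-policy', 'returns-faq', 'shipping']
print(hybrid_rank(query, query_vec, docs, alpha=1.0))
# ['refund-policy', 'shipping', 'returns-faq']
```

With pure keyword matching (`alpha=1.0`), the returns FAQ shares no words with the query and ties with the unrelated shipping page; blending in the semantic score lifts it to second place. That complementarity is the usual motivation for hybrid retrieval.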

So RAG is actually emerging as the standard enterprise approach to trustworthy generative AI, or agentic AI these days. So for companies that are like, hey, AI sucks, it doesn't work because I loaded my eighty-page PDF and said, answer the questions, and they're wondering why it's not reading the full eighty pages before answering. Yeah. Right? It's because a proper RAG system isn't in place to parse the data ahead of time and send the LLM what it actually needs versus the entire eighty pages. Yeah. You need to help the AI to be successful.
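A minimal sketch of "parse this data ahead of time and send the LLM what it actually needs," assuming paragraph-level chunking and simple keyword overlap for relevance (a production RAG system would more likely use embedding-based or hybrid retrieval). The document is split into tagged chunks once, at ingest, and only the best-matching chunks, with their source IDs, go into the prompt. The function names, the `top_k` parameter, and the sample manual are hypothetical.

```python
import re

def chunk_by_paragraph(doc_id: str, text: str) -> list[dict]:
    # Parse once at ingest time: one chunk per blank-line-separated paragraph,
    # each tagged with a source ID so answers can be traced back.
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return [{"source": f"{doc_id}#chunk{i}", "text": p} for i, p in enumerate(paragraphs)]

def terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def build_prompt(question: str, chunks: list[dict], top_k: int = 2) -> tuple[str, list[str]]:
    q = terms(question)
    # Crude relevance: number of shared terms (no stopword removal, no embeddings).
    ranked = sorted(chunks, key=lambda c: len(q & terms(c["text"])), reverse=True)
    selected = ranked[:top_k]
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in selected)
    prompt = (
        "Answer using only the sources below and cite their IDs.\n"
        f"{context}\n\nQuestion: {question}"
    )
    return prompt, [c["source"] for c in selected]

manual = (
    "Warranty covers parts for two years.\n\n"
    "The device charges over USB-C in about one hour.\n\n"
    "To reset the device, hold the power button for ten seconds."
)
chunks = chunk_by_paragraph("manual", manual)
prompt, sources = build_prompt("How do I reset the device?", chunks, top_k=1)
print(sources)  # ['manual#chunk2']
```

Only the reset paragraph reaches the model, not the whole manual. Because each chunk carries a source ID, the answer can also be traced back to the passage it came from, which is the compliance-traceability benefit of keeping data separate from the model.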

There's an MIT study from 2025 that said something like upwards of ninety percent of AI implementations are failing. What does failing mean? It doesn't mean that they're not finishing them. It's that they're not seeing the value out of it. They're not replacing the manual process they were supposed to. Yes. And that comes down to garbage in, garbage out in a lot of cases. Garbage in, or great things in but still garbage out, or skills: not understanding what it takes to get it right. But in the end, it's the garbage out that prevents adoption, that prevents going to production and scaling it.

First of all, there's a big hype that has kind of fueled itself; it's like the snowball effect. So we're in, like, super hype now about what AI can and cannot do and what it can produce immediately. On the other hand, for enterprises it's gotten more and more complicated to make a selection. Like, some quarters ago there were something like six hundred and fifty startups in a single quarter using the word "agentic." Right? It's an overwhelming amount of speed, noise, and uncertainty. Okay. Guess what enterprises do? They take a step back and say, okay, we need to think this through. So sales cycles have slowed down. Yeah. And then there's the pressure from the board: you have to get into AI or else we'll fall behind.

There's a new concept that's come up called guardian agents, which can evaluate tool choices: should we take path A or B? But you missed C. What makes sense here? So it's a kind of feedback loop that course-corrects agents as they go; that's what I mean by real-time governance. You govern the agents in the right direction.
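The interview doesn't say how guardian agents are built. As a minimal sketch, assuming a rule-based checker in place of what would likely be an LLM-based evaluator, the feedback loop might look like this: a worker agent proposes a step along with its stated intent, and a guardian either approves it or sends it back ("you missed C"), recording every decision in an intent log that can be audited later. `Proposal`, `Guardian`, the tool names, and the rules are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Proposal:
    step: str                      # which workflow step the agent is on
    intent: str                    # why the agent wants to act (kept for auditing)
    chosen_tool: str               # the path the agent picked
    options_considered: list[str]  # the paths the agent says it weighed

@dataclass
class Guardian:
    allowed_tools: set[str]
    required_options: dict[str, list[str]]  # step -> options that must be weighed
    log: list[dict] = field(default_factory=list)

    def review(self, p: Proposal) -> tuple[bool, str]:
        if p.chosen_tool not in self.allowed_tools:
            verdict = (False, f"tool '{p.chosen_tool}' is not permitted")
        else:
            missed = [o for o in self.required_options.get(p.step, [])
                      if o not in p.options_considered]
            verdict = (False, "reconsider: you missed " + ", ".join(missed)) if missed else (True, "approved")
        # Intent log: what the agent meant to do and what the guardian decided, per step.
        self.log.append({"step": p.step, "intent": p.intent, "tool": p.chosen_tool,
                         "approved": verdict[0], "reason": verdict[1]})
        return verdict

guardian = Guardian(
    allowed_tools={"search_kb", "search_crm", "escalate"},
    required_options={"find_answer": ["search_kb", "search_crm", "escalate"]},
)
# First attempt weighed paths A and B but skipped C, so the guardian redirects it.
first = guardian.review(Proposal("find_answer", "customer asked about contract terms",
                                 "search_kb", ["search_kb", "search_crm"]))
# The agent revises its plan after the feedback.
second = guardian.review(Proposal("find_answer", "customer asked about contract terms",
                                  "search_crm", ["search_kb", "search_crm", "escalate"]))
print(first)   # (False, 'reconsider: you missed escalate')
print(second)  # (True, 'approved')
```

Logging the intent alongside each verdict, not just the final action, is what lets someone reconstruct later why a multi-step workflow took the path it did.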

What we've learned with our customers is that there's always a deployment phase, an implementation phase, and then a rollout phase. And that can look very different depending on which industry or company you work with. But you let people give their feedback. You listen to people: does this work? And you take that very seriously. If this AI system doesn't do 10x what the person would do on their own to help them do their job, there will be a lot of double work. You have to build trust, and trust comes from listening, getting the feedback, and working it into the journey or process.

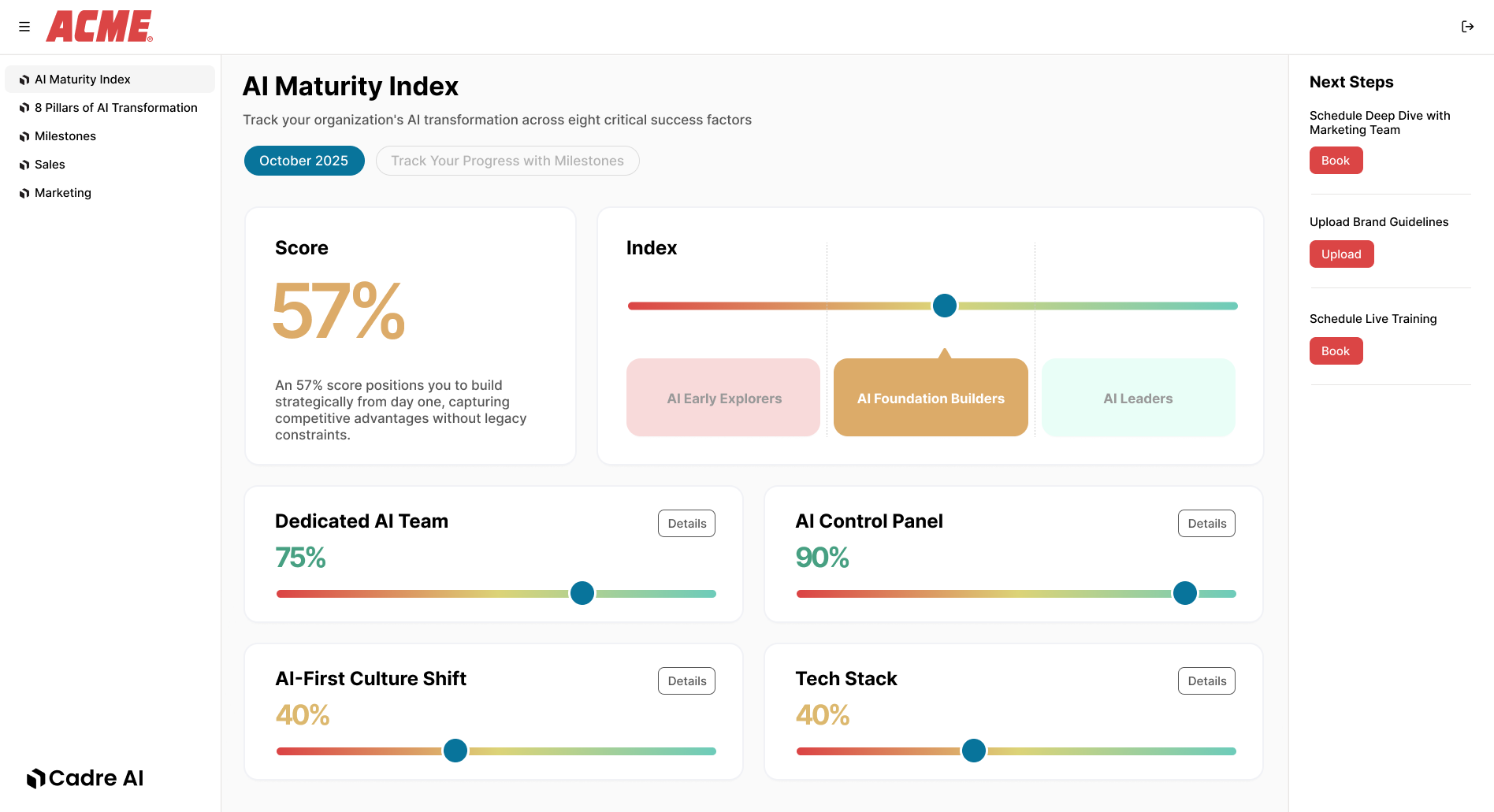

Track your AI results